Ergonomic Human-Robot Collaboration

Human-robot collaboration has immense potential to improve workplace ergonomics, i.e., a human worker’s productivity and well-being. There is a significant need for this research: conventional machines are a major cause of worker injury in industrial settings and a leading cause of absence from work.

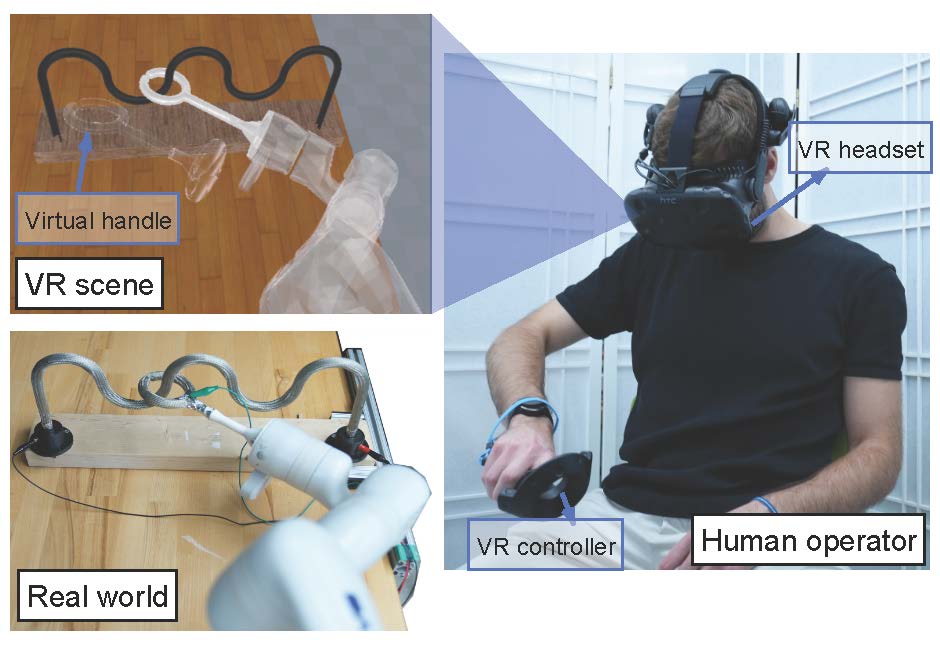

To advance this research direction, my colleagues and I presented a framework that quantifies ergonomics in human-robot collaboration based on body posture [1]. We developed a robot-to-human handover architecture in which 15 lb packages were passed from a robot to a human, mimicking typical industrial tasks where workers carry loads in repetitive motions. Using an external camera, our proposed system estimated an ergonomics score for the human’s posture when receiving these packages. The camera recognized the receiver’s joints, and a standardized assessment scale converted quantities such as the angles between these joints into a measure of their ergonomic state. In response to this ergonomics score, the robot then chose different locations in 3D space to hand over packages, with the aim of “stimulating” the receiving person’s dynamics. This “stimulating” handover policy improved ergonomics scores over standard approaches to robot-to-human handover.
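As a rough illustration of the joint-angle step described above, the Python sketch below computes the angle at one joint from three 3D keypoints and maps it to a coarse posture score. It is not the implementation from [1]: the function names `joint_angle` and `toy_posture_score`, the example keypoints, and the score bands are all hypothetical and chosen only for illustration. They are not taken from any standardized assessment scale.

```python
import math


def joint_angle(a, b, c):
    """Return the angle at keypoint b, in degrees, between segments b->a and b->c.

    a, b, c are (x, y, z) keypoints, e.g. shoulder, elbow, wrist.
    """
    ba = [p - q for p, q in zip(a, b)]
    bc = [p - q for p, q in zip(c, b)]
    norm_ba = math.sqrt(sum(x * x for x in ba))
    norm_bc = math.sqrt(sum(x * x for x in bc))
    if norm_ba == 0.0 or norm_bc == 0.0:
        raise ValueError("keypoints must be distinct to define an angle")
    cos_theta = sum(x * y for x, y in zip(ba, bc)) / (norm_ba * norm_bc)
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


def toy_posture_score(elbow_angle_deg):
    """Map an elbow angle to a coarse score (lower is better).

    ASSUMPTION: the 60-100 degree band is a made-up threshold for
    illustration, not a value from the paper or from a real scale.
    """
    return 1 if 60.0 <= elbow_angle_deg <= 100.0 else 2


# Hypothetical keypoints in metres: the upper arm and forearm are perpendicular.
shoulder, elbow, wrist = (0.0, 0.3, 0.0), (0.0, 0.0, 0.0), (0.25, 0.0, 0.0)
angle = joint_angle(shoulder, elbow, wrist)  # a right angle, about 90 degrees
score = toy_posture_score(angle)
```

A full assessment would combine several such angles (for example trunk, neck, and arms) along with the load being carried, but the geometric building block is the same.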

References

[1] M. Zolotas, R. Luo, S. Bazzi, D. Saha, K. Mabulu, K. Kloeckl, and T. Padır, “Productive Inconvenience: Facilitating Posture Variability by Stimulating Robot-to-Human Handovers”, IEEE International Conference on Robot and Human Interactive Communication, 2022.